The Science and Bias Behind AI

Aug 10, 2022

Bias and discrimination are embedded into computer programs that companies use to determine everything from who is selected for a job interview to who gets medical treatment, who gets targeted by payday lenders and who gets the low interest rate credit offers, who gets mistakenly targeted by police and who gets to interact with the software at all. Because we think of computers as unbiased, too many of us assume that if a computer program is handling the information instead of a human being, it must be fair. We are totally wrong.

Joy Buolamwini is doing groundbreaking work to identify and reduce racial and gender bias in algorithms, or artificial intelligence (AI).

Joy Buolamwini. Photograph by Naima Green

Darkness is Invisible

As part of her graduate studies at MIT, Buolamwini had a class assignment to read science fiction and build something inspired by the reading, something that, she said, "would probably be impractical if you didn't have this class as an excuse to make it." She tried to create what she called the "Aspire Mirror." She would look in it each morning, and people and things that inspired her would be superimposed over her face - a lion, Serena Williams.

But the facial recognition software that she needed to make the mirror work wouldn't recognize her face - until she put on a white mask. Other robots recognized her lighter-skinned classmates' faces, even faces she drew on her hand - but not her face. Her research showed that the algorithms were biased. Buolamwini shifted the focus of her master’s degree to analyzing systems that analyze faces. You can check out a short video summary of her project, Gender Shades.

Invisible Bias

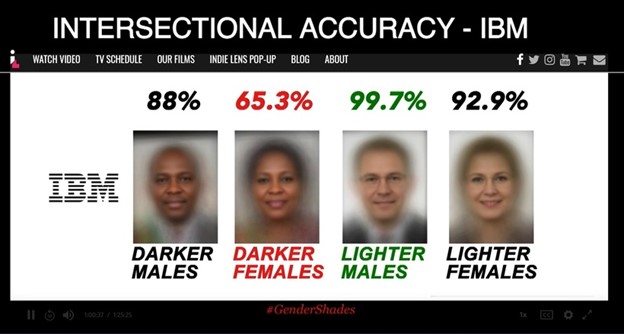

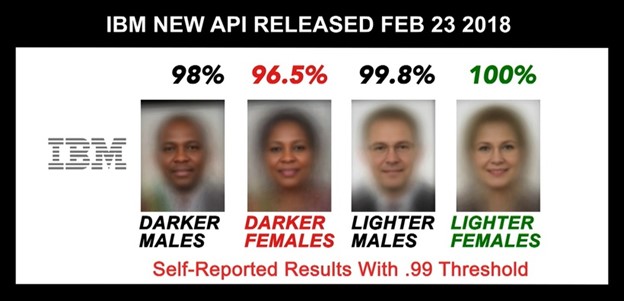

It makes sense that algorithms are skewed: if the people creating them are mostly from one demographic, they will create algorithms based on their limited view. Most facial recognition software is accurate for white men, but less accurate for men of color. These algorithms are not nearly as accurate for women in general and are appallingly inaccurate for darker-skinned women. The programs also don't recognize nonbinary faces. When Buolamwini found the faces that the developers used to teach the artificial intelligence program what to look for, about 90% were white men, so that's what the computer recognized.

It would be bad enough if the algorithms only applied to face recognition used for games and school projects, but these programs are being deployed around the world for law enforcement, credit scoring, and other uses that impact people's lives.

Buolamwini looked at programs by every software manufacturer she could find and it turned out that ALL of them recognized white male faces better than any other demographic. For example, Amazon's facial recognition program scored 100% on white male faces, 98.7% on darker male faces, 92.9% on white female faces, and just 68.6% on darker female faces. And that is a HUGE concern because Amazon wants to sell their software to law enforcement, including the FBI. Rather than try to fix the bias in their algorithm, Amazon attacked the research and tried to discredit the women who did the research.
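To see how wide that gap is, here is a minimal Python sketch that uses only the four accuracy figures quoted above. The function names are made up for illustration and are not from Buolamwini's research code. The point is simply to report results per group instead of as one overall score.

```python
# Accuracy figures quoted above for Amazon's program (percent of faces handled correctly).
accuracy = {
    "white male": 100.0,
    "darker male": 98.7,
    "white female": 92.9,
    "darker female": 68.6,
}

def error_rates(acc):
    """Turn percent-correct into percent-wrong for each group."""
    return {group: round(100.0 - pct, 1) for group, pct in acc.items()}

def accuracy_gap(acc):
    """Return the best-served group, the worst-served group, and the gap in percentage points."""
    best = max(acc, key=acc.get)
    worst = min(acc, key=acc.get)
    return best, worst, round(acc[best] - acc[worst], 1)

print(error_rates(accuracy))
print(accuracy_gap(accuracy))
```

Broken out this way, the program gets roughly one in three darker female faces wrong (31.4%) and none of the white male faces, a gap of 31.4 percentage points that a single overall score would hide.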

The Danger of Invisible Bias

Buolamwini’s research and social justice activism were the inspiration for the documentary Coded Bias (available on Netflix and PBS). In the movie, women (mostly) sound the alarm about the appearance of impartiality covering for egregious inequity.

Meredith Broussard, author of Artificial Unintelligence, explains that most people think of artificial intelligence (AI) as computers learning to behave like humans, the way they do in sci-fi movies. What we actually use is "narrow AI," which is just math. It distills intelligence down to being able to process problems and win games, but human intelligence is so much more than that!

Cathy O'Neil, author of Weapons of Math Destruction, worked for Wall Street and saw first-hand how algorithms were "being used as a shield for corrupt practices." She defines an algorithm as "using historical information to make a prediction about the future." The underlying math isn't racist or sexist, but the data embedded in the algorithm too often is skewed. Her concern is the power dynamic. The people who own the code control who gets the good credit card offers and who gets targeted with scam offers that will put poor people further in debt. And there's no way for those of us being targeted to appeal or even know what we don't see.
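O'Neil's definition can be sketched in a few lines of Python. Everything here is hypothetical: the neighborhoods, the past decisions, and the function names are invented for illustration, and real credit models are far more complicated. The sketch only shows that neutral arithmetic applied to skewed history gives skewed predictions.

```python
from collections import defaultdict

# Hypothetical past decisions: (neighborhood, was_approved). Invented data, not real records.
history = [
    ("north", True), ("north", True), ("north", True), ("north", False),
    ("south", True), ("south", False), ("south", False), ("south", False),
]

def learn_approval_rates(records):
    """'Train' by counting how often each group was approved in the past."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}

def predict(rates, group, threshold=0.5):
    """Predict approval for a new applicant purely from their group's history."""
    return rates[group] >= threshold

rates = learn_approval_rates(history)
print(rates)
print(predict(rates, "north"), predict(rates, "south"))
```

Nothing in the code mentions race, income, or intent. It just counts. But a new applicant from the hypothetical "south" neighborhood is turned down because of what happened to other people there before, and they never see the rule that did it.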

Virginia Eubanks, PhD, author of Automating Inequality, pointed out that while we tend to think that rich people get access to technology first, that's often wrong. The "most invasive, most punitive, most surveillance-focused tools" are forced on poor and working communities first. Atlantic Plaza Towers in Brooklyn, NY wanted to install face recognition software as a security measure, forcing residents to use their faces to enter the building instead of keys. The landlords didn't want to install the software at their high-end apartments; they wanted to experiment on their poorest tenants.

Standing Against Injustice

Buolamwini met with the residents and spearheaded their movement to stop biometrics from being installed in their apartment building. She even testified before Congress. (There's a clip in the movie of Representatives Alexandria Ocasio-Cortez and Jim Jordan asking questions and agreeing that biometric surveillance is intrusive.) The residents won for now.

Safiya Umoja Noble, PhD, author of Algorithms of Oppression, talks about people being "optimized" for failure through targeting. The 2008 financial crisis was an "algorithmic game that came out of Wall Street," and nearly 4 million Americans lost their homes. The housing crisis created the "largest wipe-out of Black wealth in the history of the United States."

The Algorithm Did It

Algorithms affect more than law enforcement and housing. United Health Care came under fire when their algorithm prioritized medical care for healthier white patients over sicker Black patients. Algorithms can even decide which parolees are high risk, how often they have to meet with their parole officers, and whether those meetings need to be in person. The algorithm does NOT take into account individual behavior or accomplishments, so guess which demographic is punished more and which is given fewer hurdles. Algorithms have been used to decide which teachers get tenure and which get fired, but they don't take into account supervisory reviews. The results are just off the wall.

In 2010, Facebook targeted 61 million people with an ad to get out the vote. One version basically said, "Vote." A second version added photos of the target's friends at the bottom with the number of their Facebook contacts who had already voted. They ran the ad just one time, and influenced 300,000 people to go vote. And the only way we know about it is because they told us. The 2016 election was decided by about 100,000 votes. There are no laws regulating how Facebook uses algorithms to influence its users.
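A quick back-of-the-envelope calculation in Python puts those numbers side by side. It uses only the three figures from the paragraph above; the variable names are just labels for this sketch.

```python
# Figures quoted in the paragraph above.
targeted = 61_000_000     # people shown the 2010 get-out-the-vote ad
extra_voters = 300_000    # people influenced to go vote
margin_2016 = 100_000     # approximate votes that decided the 2016 election

share_influenced = extra_voters / targeted
ratio_to_margin = extra_voters / margin_2016

print(f"{share_influenced:.2%} of the people targeted")
print(f"{ratio_to_margin:.0f}x the 2016 margin")
```

Less than half of one percent of the people targeted changed their behavior, yet that small nudge adds up to about three times the number of votes that decided 2016.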

Coded Bias shows how Big Brother Watch in the UK is fighting against facial recognition and how it is used by police to harass people. We also get a glimpse of how facial recognition and other algorithms in China are embedded in everything, creating a social credit score for each resident. People use facial recognition to shop, ride trains and planes, and unlock their apartments. Algorithm scores are so ingrained in society that Wang Jia Jia said she likes that she can look at someone's score and know if they are trustworthy instead of relying on her own judgement. I immediately thought that program doesn't measure trustworthiness; it measures compliance. The Chinese social credit score not only affects the person it belongs to, it can affect anyone they are connected to, so there's a huge incentive to obey the government. Amy Webb, author of The Big Nine, said, "The key difference between the United States and China is that China is transparent about it."

Wins for Equity

Since these women have been fighting for justice (Buolamwini started the nonprofit Algorithmic Justice League), some things have gone well.

- IBM invited Buolamwini to their headquarters, replicated her experiment, and then improved their code

- In June 2020, Amazon announced a 1-year pause on police use of its facial recognition technology, Rekognition. In 2021, they extended the ban on police usage of the software "until further notice" after IBM halted the use of their facial recognition systems and condemned the use of facial recognition "for mass surveillance, racial profiling [or] violations of basic human rights and freedoms."

- Lawmakers introduced legislation to ban federal use of facial recognition technology in June 2020, but it hasn't been passed yet

- In May 2019, San Francisco, CA became the first city in the U.S. to ban facial recognition technology, followed by Somerville, MA, and Oakland, CA

- Some states have banned or limited facial recognition or the collection of other biometric data in specific situations

- Buolamwini has partnered with companies like Olay to #DecodeTheBias by exposing girls of color to coding and encouraging women of color to pursue careers in STEM

There's still no federal regulation for algorithms or oversight on how AI can be used. The people using them don't care much about accountability. After all, the negative outcomes won't affect them. I wish Congress would take this seriously and protect the residents of this country BEFORE something as bad as or worse than the housing crisis happens again. It really pisses me off that Wall Street created the algorithm that targeted people to buy houses at rates they couldn't afford, and the banks got bailed out, but not the people who were intentionally scammed and lost their homes.

Breathe and make equity a habit

This is a lot to take in if you didn’t already know about it. Bias and injustice are everywhere, even when we can’t see them. Remember to breathe and get centered in your body. These systems weren’t put in place overnight, and we won’t undo them overnight. That’s why I encourage everyone to make equity a habit instead of a goal. When it’s a habit, we bring that energy of creating space for marginalized groups and having these conversations into every space we occupy.

If you're not a member of Inspired by Indigo, join the community to work together as we build the skills to make equity a habit:

- self-care because healing is a form of protest

- know your facts to undo myths and lies

- undo the work - what we've learned (and lived) about race, oppression, and privilege

- rest and celebrate to avoid burnout and disillusionment

Sending fierce love!

Ready to DO something right now? Download the Everyday Activism Action Pack and get started today.
